AI now appears in nearly every enterprise data roadmap, often positioned as the next step after cloud migration and analytics modernization. As a result, organizations are investing heavily and moving quickly, launching proofs of concept, testing models, and building demos that highlight what AI can do in controlled settings. But when teams try to move beyond experimentation and into production, progress slows. AI remains something that is demonstrated rather than something the business consistently relies on.

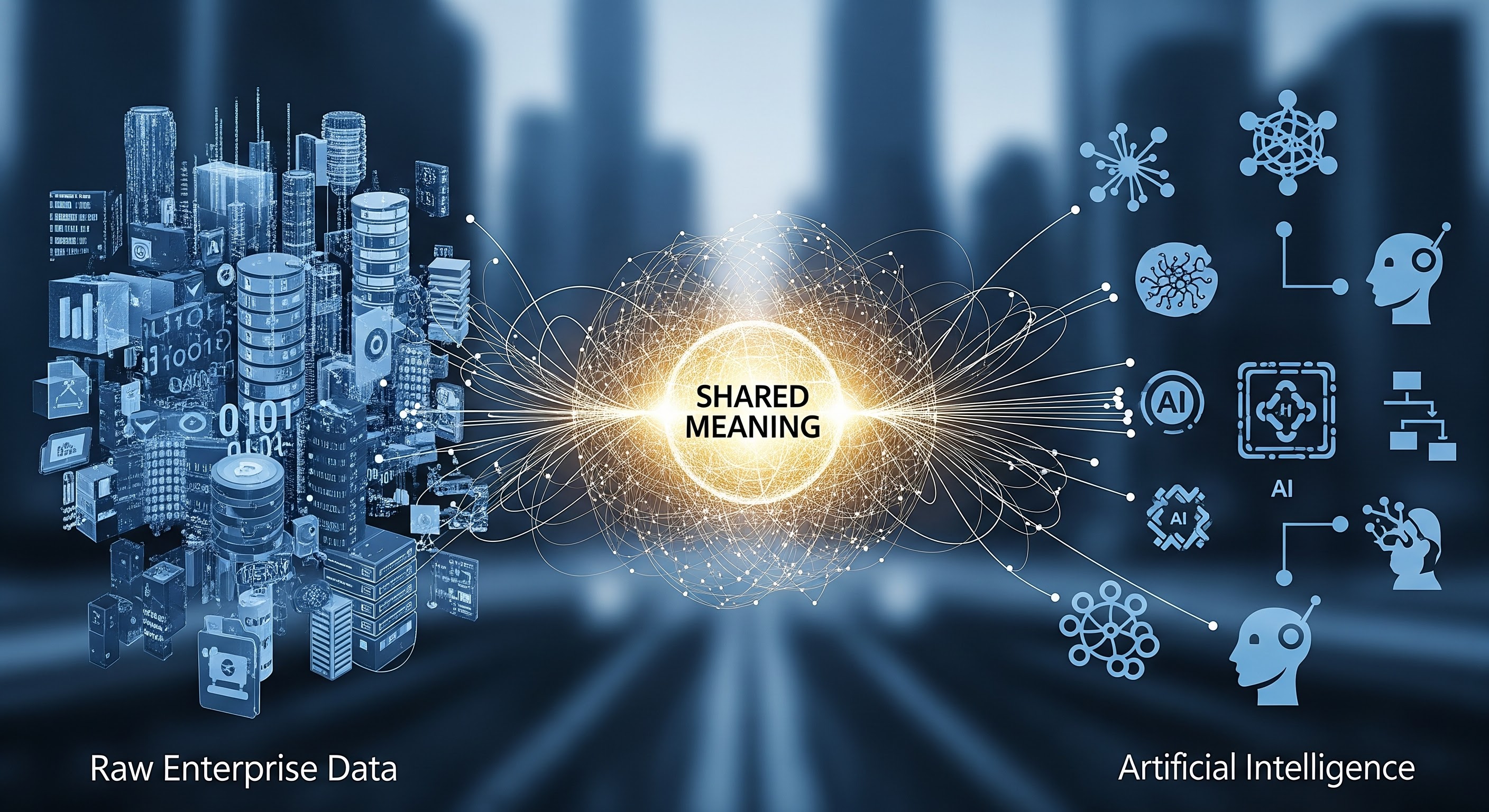

This stall is not the result of insufficient ambition, funding, or technical capability. It reflects a deeper issue: most enterprise systems were never designed to support how AI actually works. AI does not operate on infrastructure alone; it operates on meaning.

In this article, we examine why modern data stacks fail to support AI at scale, how the absence of shared semantics undermines trust and explainability, and what organizations can change to make AI reliable in real-world production environments.

AI Operates on Meaning, Not Infrastructure

AI systems do not understand warehouses, pipelines, dashboards, or schemas in the way humans do. They reason about concepts, relationships, and context. For AI to produce reliable, repeatable outcomes, it needs a stable semantic foundation to operate on. Without shared meaning, AI can generate output but cannot generate understanding. This is why humans must explicitly define meaning through modeling before machines can use it.

Modernization Alone Doesn’t Make Your Data AI-Ready

Many organizations assume that modernizing their data stack automatically makes them ready for AI. In practice, modernization often moves complexity without resolving it. Common blockers include the absence of a unified semantic layer, business and technical teams defining the same concepts differently, legacy warehouses encoding outdated assumptions, fragmented or stale metadata, and tools that attempt to automate chaos instead of structuring it. In this environment, AI doesn’t solve problems; it exposes them.

There is a growing belief that AI can infer semantics on its own, that models can “figure out” what data means if given enough examples. In practice, AI reflects existing structure; it doesn’t invent shared understanding. When meaning is unclear, AI amplifies ambiguity. AI can accelerate work, but it cannot replace the human work of deciding what things mean.

When teams explicitly model their domains, capturing concepts, relationships, and assumptions, they create a foundation AI can reliably build on. The most effective data teams use AI to amplify human effort only after the semantic layer is in place.

Signs Your Data Isn’t AI-Ready

When the same terms mean different things across teams, AI has no stable frame of reference. A “customer,” “revenue,” or “risk” metric may appear consistent on the surface but represent different assumptions depending on the system or function using it. AI trained on this fragmented meaning produces outputs that look reasonable in isolation yet conflict when applied across the business, undermining confidence at scale.

This is the gap Ellie.ai is designed to address. By providing a shared modeling layer where domains, concepts, and metrics are defined once in clear business terms, Ellie.ai gives AI a stable semantic frame of reference. Instead of learning from fragmented interpretations across systems, AI operates on a single, explicitly agreed-upon definition of each concept.

Business context often lives in conversations, slide decks, and informal documentation, while technical meaning is encoded in pipelines, schemas, and transformation logic. Because these perspectives rarely converge in a shared system, AI inherits the gap. Models learn from implementation details without business nuance, leaving stakeholders struggling to interpret or trust the results.

Ellie.ai brings business and technical teams into the same modeling environment, allowing context, intent, and structure to be defined together rather than translated across documents and handoffs. When meaning is co-created instead of inferred, AI inherits both business nuance and technical precision, reducing misinterpretation before it reaches production.

Warehouses and pipelines frequently hard-code definitions that once reflected the business but no longer do. As products evolve, regulations change, and operating models shift, those assumptions remain buried in transformation logic. AI trained on top of these systems scales yesterday’s understanding at today’s speed, reinforcing decisions the business may no longer agree with.

By separating semantic meaning from physical implementation, Ellie.ai makes assumptions explicit and changeable without requiring immediate rewrites of legacy systems. This allows AI to scale current business understanding rather than reinforcing outdated logic embedded deep in transformation pipelines.
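To make the idea of separating meaning from implementation concrete, here is a minimal Python sketch. It is an illustration only and does not represent Ellie.ai's API: the `Concept` and `PhysicalMapping` classes, the `active_customer` term, and the `legacy_dw.cust_master` table are all hypothetical names chosen for the example. The point is simply that the business definition can change while the record of where the data physically lives stays untouched.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Concept:
    """Business-level meaning, written without reference to any table."""
    name: str
    definition: str


@dataclass(frozen=True)
class PhysicalMapping:
    """Where a concept currently lives in a physical system."""
    concept_name: str
    table: str
    column: str


# Hypothetical example data: meaning and storage location are kept apart.
concepts = {
    "active_customer": Concept(
        name="active_customer",
        definition="A customer with at least one paid order in the last 90 days.",
    )
}
mappings = {
    "active_customer": PhysicalMapping(
        concept_name="active_customer",
        table="legacy_dw.cust_master",
        column="is_active_flag",
    )
}


def redefine(name: str, new_definition: str) -> None:
    """Update the business meaning without touching the physical mapping."""
    if name not in concepts:
        raise KeyError(f"unknown concept: {name}")
    concepts[name] = Concept(name=name, definition=new_definition)


def describe(name: str) -> str:
    """Combine meaning and location into one human-readable line."""
    concept, mapping = concepts[name], mappings[name]
    return f"{concept.name}: {concept.definition} (stored in {mapping.table}.{mapping.column})"


# The business changes its mind; the legacy warehouse is not rewritten.
redefine("active_customer", "A customer with an active subscription today.")
print(describe("active_customer"))
```

In a real environment the two dictionaries would be backed by a modeling tool or catalog rather than held in memory; the separation between them is the part that matters.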

Many organizations have metadata catalogs and governance tools, but definitions are often incomplete, stale, or disconnected from execution. Without trusted semantics, metadata explains how data moved, not what it means. AI systems relying on this metadata become harder to govern and explain, increasing manual validation instead of reducing it.

Ellie.ai treats semantics as the source of truth for metadata, ensuring definitions stay connected to domains, ownership, and relationships as systems evolve. When metadata reflects explicit meaning instead of inferred behavior, AI outputs become easier to govern, explain, and trust.

In environments without stable semantics, AI often looks powerful but behaves unpredictably. This fragility is not a model problem; it’s a semantic one. Without shared meaning, AI cannot earn trust. When AI operates within clearly defined semantic boundaries, its behavior becomes predictable and explainable. Ellie.ai provides those boundaries by anchoring AI to durable, shared definitions, turning AI from a fragile demonstration tool into something the business can confidently rely on in real decisions.

What “AI-Ready” Actually Means

Being AI-ready doesn’t mean deploying larger models or adding more automation. It means creating shared meaning that systems can rely on. AI-ready organizations have explicit domain models, shared definitions that persist across systems, and a semantic layer that connects people, data, and execution. In these environments, AI doesn’t guess; it operates within boundaries the organization understands and trusts.

How to Make Your Data AI-Ready

AI readiness starts with people, not models. Teams must explicitly define domains, concepts, and relationships in business language before introducing automation. When meaning is established upfront, AI has a stable foundation to work from instead of guessing intent after the fact.
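As a rough illustration of what "explicitly defining domains, concepts, and relationships" can look like once it leaves the whiteboard, the sketch below models a domain in a few lines of Python. Everything here is an assumption made for the example: the `DomainModel` class, its `define` and `relate` methods, and the "Sales" domain are hypothetical and are not taken from any particular product. The sketch enforces two simple rules: a concept is defined once, and a relationship may only connect concepts that have already been defined.

```python
from dataclasses import dataclass, field


@dataclass
class DomainModel:
    """A tiny in-memory domain model: named concepts plus relationships."""
    name: str
    concepts: dict[str, str] = field(default_factory=dict)  # concept -> definition
    relationships: list[tuple[str, str, str]] = field(default_factory=list)

    def define(self, concept: str, definition: str) -> None:
        """Register a concept once, in business language."""
        if concept in self.concepts:
            raise ValueError(f"'{concept}' is already defined in {self.name}")
        self.concepts[concept] = definition

    def relate(self, source: str, verb: str, target: str) -> None:
        """Link two concepts; both must already have a definition."""
        for concept in (source, target):
            if concept not in self.concepts:
                raise KeyError(f"'{concept}' must be defined before it is related")
        self.relationships.append((source, verb, target))


# Hypothetical "Sales" domain used only for illustration.
sales = DomainModel("Sales")
sales.define("Customer", "A legal entity that has signed at least one contract.")
sales.define("Order", "A confirmed request for goods or services.")
sales.relate("Customer", "places", "Order")

for source, verb, target in sales.relationships:
    print(f"{source} {verb} {target}")
```

Rejecting duplicate definitions is a deliberate choice in this sketch: it forces two teams with competing meanings for the same term to resolve the conflict in conversation rather than letting the second definition silently overwrite the first.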

Semantics should not live in slide decks, wikis, or side documents. They need to be embedded in systems so that meaning is inherited consistently across pipelines, analytics, and AI workflows. When semantics are part of the infrastructure, alignment scales with execution.

AI is most effective when it builds on shared understanding rather than trying to infer it. Once semantics are clear, AI can suggest improvements, generate metadata, accelerate validation, and support intelligent agents. Its role is to amplify human decisions, not replace them.

AI-ready systems assume that meaning will evolve. Definitions change, domains expand, and assumptions shift. The right foundation makes these changes explicit and traceable, allowing teams to adapt without breaking downstream systems or losing trust.
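One way to picture "explicit and traceable" change is a definition that keeps its own history. The following Python sketch is a simplified assumption about how that might be represented, not a description of how any specific tool does it. The `VersionedDefinition` class, its `revise` and `as_of` methods, and the "revenue" example are hypothetical, and the sketch assumes revisions are recorded in chronological order.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class DefinitionVersion:
    """One immutable entry in a definition's history."""
    version: int
    text: str
    changed_on: date
    reason: str


class VersionedDefinition:
    """A business term whose meaning can change without losing its past."""

    def __init__(self, term: str, text: str, changed_on: date) -> None:
        self.term = term
        self.history = [DefinitionVersion(1, text, changed_on, "initial definition")]

    @property
    def current(self) -> DefinitionVersion:
        return self.history[-1]

    def revise(self, text: str, changed_on: date, reason: str) -> DefinitionVersion:
        """Append a new version; earlier versions are never overwritten."""
        # Assumption: callers record revisions in chronological order.
        new_version = DefinitionVersion(self.current.version + 1, text, changed_on, reason)
        self.history.append(new_version)
        return new_version

    def as_of(self, day: date) -> DefinitionVersion:
        """Return the version that was in force on a given day."""
        applicable = [v for v in self.history if v.changed_on <= day]
        if not applicable:
            raise LookupError(f"'{self.term}' had no definition on {day}")
        return applicable[-1]


# Hypothetical example: the meaning of "revenue" changes after a policy update.
revenue = VersionedDefinition("revenue", "Total invoiced amount.", date(2023, 1, 1))
revenue.revise(
    "Total invoiced amount net of refunds.",
    date(2024, 7, 1),
    reason="finance policy update",
)

print(revenue.as_of(date(2024, 1, 15)).text)
print(revenue.current.text)
```

With a history like this, a downstream report or model can state which version of a definition it was built against, so a later change shows up as a visible difference instead of a silent shift in the numbers.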

In a semantics-first environment, AI becomes genuinely powerful. It can extend models intelligently, generate and maintain metadata, accelerate validation, support intelligent agents, and enable scalable modernization across systems. In this role, AI acts as an amplifier of clarity rather than a substitute for understanding.

Build Your Semantic Layer with Ellie.ai

Ellie.ai was built to make shared meaning explicit before execution. It gives business and technical teams a single environment to define domains, concepts, and relationships together, so AI systems operate on agreed understanding rather than inferred assumptions.

This is what being truly AI-ready requires: not more automation or faster pipelines, but durable semantics that scale with the business. Without shared meaning, AI only accelerates confusion. With it, AI operates with context the organization can trust. Get your free Ellie.ai trial today.